Image recognition is the ability of computers to identify and classify specific objects, places, people, text and actions within digital images and videos. As an application of computer vision, image recognition software works by analyzing and processing the visual content of an image or video and comparing it to learned data, allowing the software to automatically “see” and interpret what is present, the way a human might be able to.

What Is Image Recognition?

Image recognition is an application of computer vision in which machines identify and classify specific objects, people, text and actions within digital images and videos. Essentially, it’s the ability of computer software to “see” and interpret things within visual media the way a human might.

Image recognition is an integral part of the technology we use every day — from the facial recognition feature that unlocks smartphones to mobile check deposits on banking apps. It’s also commonly used in areas like medical imaging to identify tumors, broken bones and other aberrations, as well as in factories to detect defective products on the assembly line.

How Does Image Recognition Work?

To understand how image recognition works, it’s important to first define digital images.

A digital image is composed of picture elements, or pixels, which are organized spatially into a two-dimensional grid or array. Each pixel has a numerical value that corresponds to its light intensity, or gray level, explained Jason Corso, a professor of robotics at the University of Michigan and co-founder of computer vision startup Voxel51.
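To make the idea of a pixel grid concrete, here is a minimal sketch of a tiny grayscale image stored as rows of numbers. The 0 to 255 intensity range and the 4x4 size are assumptions chosen for illustration, not details from Corso.

```python
# A tiny 4x4 grayscale image: each number is one pixel's light intensity.
# Assumption for illustration: 0 is black and 255 is white.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

height = len(image)
width = len(image[0])

# Read a single pixel by its row and column position in the grid.
top_right = image[0][width - 1]

# Average brightness across every pixel in the image.
mean_intensity = sum(sum(row) for row in image) / (height * width)

print(height, width, top_right, mean_intensity)
```

A color image would typically hold several such values per pixel (for example, one each for red, green and blue) rather than a single gray level.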

Using image recognition, a computer vision system can recognize patterns and regularities in all that numerical data that correspond to things like people, vehicles or tumors. It essentially automates the innate human ability to look at an image, identify objects within it and respond accordingly. (A human retina can process about 10 one-million-point images per second, which get parsed by the brain so we can quickly assimilate, contextualize and react to what we are seeing.)

“Computer vision is basically doing the brain’s share of the work,” Corso told Built In. “It’s really complex.”

These days, image recognition is based on deep learning — a subcategory of machine learning that uses multi-layered structures of algorithms called neural networks to continually analyze data and draw conclusions about it, similar to the way the human brain works. In the case of image recognition, neural networks are fed with as many pre-labeled images as possible to “teach” them how to recognize similar images.

The process is typically broken down into three distinct steps:

1. A Data Set Is Gathered

After a massive data set of images and videos has been created, it must be analyzed and annotated with any meaningful features or characteristics. For instance, a dog image needs to be identified as a “dog.” And if there are multiple dogs in one image, they need to be labeled with tags or bounding boxes, depending on the task at hand.
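As a rough illustration of what an annotation might look like, the sketch below stores one image's tags and bounding boxes. The class names, field names and file name are hypothetical; real annotation tools each use their own formats.

```python
from dataclasses import dataclass, field


@dataclass
class BoxLabel:
    """One labeled rectangle inside an image (hypothetical format)."""
    label: str
    x: int       # left edge, in pixels
    y: int       # top edge, in pixels
    width: int
    height: int


@dataclass
class AnnotatedImage:
    """An image plus the labels a human annotator attached to it."""
    filename: str
    tags: list = field(default_factory=list)
    boxes: list = field(default_factory=list)


# A made-up photo containing two dogs: one image-level tag, two boxes.
sample = AnnotatedImage(
    filename="park.jpg",
    tags=["dog"],
    boxes=[
        BoxLabel("dog", 40, 60, 120, 90),
        BoxLabel("dog", 210, 75, 100, 80),
    ],
)

dogs = [box for box in sample.boxes if box.label == "dog"]
print(len(dogs))
```

The image-level tag is enough for a task that only asks whether a dog is present, while the boxes are needed when the task also asks where each dog is.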

2. The Neural Network Is Fed and Trained

Next, a neural network is fed and trained on these images. As with the human brain, the machine must be taught to recognize a concept by showing it many different examples. If the data has all been labeled, supervised learning algorithms are used to distinguish between different object categories (a cat versus a dog, for example). If the data has not been labeled, the system uses unsupervised learning algorithms to analyze the different attributes of the images and determine the important similarities or differences between the images.
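The supervised case can be sketched with something far simpler than a neural network. The example below uses a nearest-centroid classifier, a stand-in technique chosen for brevity, on made-up two-number "features" for each image. All values and names here are assumptions for illustration.

```python
import math

# Made-up feature vectors for four labeled images. In a real system the
# features would come from the pixels; these numbers are purely illustrative.
labeled = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
]


def fit_centroids(examples):
    """Average the feature vectors of each label (the 'training' step)."""
    sums = {}
    counts = {}
    for features, label in examples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(value / counts[label] for value in total)
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Pick the label whose average example is closest to the new input."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


centroids = fit_centroids(labeled)
print(predict(centroids, (0.85, 0.25)))
print(predict(centroids, (0.25, 0.7)))
```

The labels are what make this supervised: without them, the system could still group similar feature vectors together, but it would have no names to attach to the groups.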

For image recognition tasks, convolutional neural networks (CNNs) are a common choice because they learn to detect significant features in images on their own, without hand-engineered feature extraction.

To accomplish this, CNNs stack different layers. The first, known as a convolutional layer, slides filters (also known as kernels) across the pixels of each input image and computes how strongly each small patch matches the filter, extracting important features or patterns like edges and corners.
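A filter pass can be sketched in a few lines of plain Python. The kernel below is a hand-picked vertical-edge filter used only for illustration; in a real CNN the kernel values are learned during training, and the function name is hypothetical.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like the convolution layers in most deep learning libraries, this
    multiplies each patch by the kernel without flipping it.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Dark on the left, bright on the right: one vertical edge in the middle.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Hand-picked filter that responds where brightness rises from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The resulting feature map is zero over the flat regions and large where the patch straddles the edge, which is the sense in which a filter "extracts" a feature.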

The CNN then uses what it learned from the first layer to look at slightly larger parts of the image, making note of more complex features. It keeps doing this with each layer, looking at bigger and more meaningful parts of the picture until it decides what the picture is showing based on all the features it has found.
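One way to see why deeper layers "look at" larger parts of the image is to count how many input pixels can influence a single value after several stacked layers. The sketch below assumes the simplest case, where every layer uses stride 1 with no pooling or dilation; the function name is hypothetical.

```python
def receptive_field(kernel_sizes):
    """Width of the input region seen by one output value.

    Assumes every layer has stride 1 and no pooling or dilation, so each
    layer with kernel size k widens the region by k - 1 pixels.
    """
    span = 1
    for k in kernel_sizes:
        span += k - 1
    return span


# Stack up to four layers of 3x3 filters and watch the region grow.
for depth in range(1, 5):
    print(depth, receptive_field([3] * depth))
```

Under these assumptions the region grows from 3 pixels wide after one layer to 9 pixels wide after four, so later layers can respond to larger structures than a single small filter could cover.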

“In deep learning, you don’t need hand-engineered features. You just need large amounts of data. And the model is deep enough that the early layers actually extract useful features first, and then classify what the object is,” Vikesh Khanna, chief technology officer and co-founder of Ambient.ai, told Built In. “The features are actually learned by the model itself.”

3. Inferences Are Converted Into Actions

Once an image recognition system has been trained, it can be fed new images and videos. Rather than looking these up in the original training data set, the system applies the patterns it learned during training to make predictions. This is what allows it to assign a particular classification to an image, or indicate whether a specific element is present.
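A common way for a classifier to turn its raw output into a prediction is to convert one score per category into probabilities and pick the largest. The sketch below assumes a model has already produced the scores; the labels and numbers are made up for illustration.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog", "car"]
raw_scores = [1.2, 3.4, 0.3]  # hypothetical model outputs for one new image

probs = softmax(raw_scores)
best = max(range(len(labels)), key=lambda i: probs[i])
prediction = labels[best]
confidence = probs[best]

print(prediction, round(confidence, 3))
```

The probability attached to the winning label is often treated as a confidence score, which downstream logic can use to decide whether the prediction is strong enough to act on.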

Those inferences can then be put into action: A self-driving car detects a red light and comes to a stop; a security camera identifies a weapon being drawn and sends an alert.
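The hand-off from prediction to action can be as simple as a lookup guarded by a confidence threshold. The label names, action names and the 0.8 threshold below are all assumptions for illustration; a production system would involve far more safeguards.

```python
# Hypothetical mapping from a recognized label to a response.
ACTIONS = {
    "red_light": "stop_vehicle",
    "weapon": "send_alert",
}


def decide(label, confidence, threshold=0.8):
    """Return an action only when the prediction is confident enough."""
    if confidence < threshold:
        return "no_action"
    return ACTIONS.get(label, "no_action")


print(decide("red_light", 0.95))
print(decide("weapon", 0.50))
print(decide("tree", 0.99))
```

Here the low-confidence weapon detection and the unmapped tree both result in no action, while the confident red-light detection triggers a stop.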

Image Recognition Examples and Use Cases

With image recognition, a machine can identify objects in a scene just as easily as a human can — and often faster and at a more granular level. And once a model has learned to recognize particular elements, it can be programmed to perform a particular action in response, making it an integral part of many tech sectors.

Image Search

Searching for images requires image recognition, whether it is done using text or visual inputs.

For instance, Google Lens allows users to conduct image-based searches in real time. So, if someone finds an unfamiliar flower in their garden, they can simply take a photo of it and use the app to not only identify it, but get more information about it. Google also uses optical character recognition to “read” text in images and translate it into different languages.

Meanwhile, Vecteezy, an online marketplace of photos and illustrations, implements image recognition to help users more easily find the image they are searching for — even if that image isn’t tagged with a particular word or phrase.

“It really understands the nuance and stuff inside the image in a much better way,” Vecteezy’s chief technology officer Adam Gamble told Built In. “You can go in with these really long, descriptive phrases in our search, like, ‘a woman at a cafe, drinking coffee, laughing with her friends and wearing a hat,’ and then it will find that for you, even though that image may not be tagged with all those different things.”

Medical Diagnosis

Image recognition plays a crucial role in medical imaging analysis, allowing healthcare professionals and clinicians to more easily diagnose and monitor certain diseases and conditions. It can assist in detecting abnormalities in medical scans such as MRIs and X-rays, even when they are in their earliest stages.

Healthcare professionals can also use image recognition to identify and track patterns in tumors or other anomalies in medical images, leading to more accurate diagnoses and treatment planning. Image recognition is most commonly used in medical diagnoses across the radiology, ophthalmology and pathology fields.

Retail

Image recognition benefits the retail industry in a variety of ways, particularly when it comes to task management. By enabling faster and more accurate product identification, image recognition quickly retrieves relevant product information like pricing and availability.

For example, if PepsiCo inputs photos of its cooler doors and shelves full of product, an image recognition system would be able to identify every bottle or case of Pepsi in them. The system can then learn more specifics about each object using deep learning, such as recognizing that a given box contains 12 cherry-flavored Pepsis.

Image recognition is also helpful in shelf monitoring, inventory management and customer behavior analysis. By capturing images of store shelves and continuously monitoring their contents down to the individual product, companies can optimize their ordering process, record keeping and understanding of what products are selling to whom, and when.

An example of this is FORM’s GoSpotCheck product, which allows companies to collect more insight into their products at every step of the supply chain — from how they’re stored during shipping to their position on shelves — with its image recognition software.

Security

Image recognition is used in security systems for surveillance and monitoring purposes. It can detect and track objects, people or suspicious activity in real time, enhancing security measures in public spaces, corporate buildings and airports to prevent incidents from happening.

“Physical security is under a major transformation,” Khanna said. “Most of the forward-looking physical security teams are adopting AI to make operations more proactive.”

Ambient.ai does this by integrating directly with security cameras and monitoring all the footage in real time to detect suspicious activity and threats.

This requires using computer vision and image recognition to detect all the objects in a given scene, all the interactions between those objects and understand them within the context of the scene, Khanna explained. “Is a person carrying a knife suspicious or interesting? Not in a kitchen. But in a corporate lobby of an office that’s very interesting, very suspicious. So where things are happening, when they are happening is actually extremely important for applying computer vision.”

Challenges of Image Recognition

While image recognition has become an essential tool across various industries, it still has some downsides to consider.

Changes in Lighting

A number of variables can impact the performance of image recognition systems, including shifts in brightness and shadowing. Both bright spots and excessive shadows can prevent systems from discerning key details that allow them to identify objects. Using training data with a wide range of light conditions can address this issue.
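One simple way to widen the range of light conditions in a training set is to generate darker and brighter copies of existing images. The sketch below assumes grayscale pixels in a 0 to 255 range; the function name and scaling factors are illustrative choices.

```python
def adjust_brightness(image, factor):
    """Scale every pixel by factor, clamping results to the 0-255 range."""
    return [
        [max(0, min(255, round(pixel * factor))) for pixel in row]
        for row in image
    ]


original = [
    [50, 100],
    [150, 200],
]

# Produce a darker copy, an unchanged copy and a brighter copy.
factors = [0.5, 1.0, 1.5]
augmented = [adjust_brightness(original, f) for f in factors]

for factor, variant in zip(factors, augmented):
    print(factor, variant)
```

Each variant keeps the original label, so the model sees the same object under several lighting conditions without anyone having to collect new photos.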

Sensitivity to Training Data

Training data sets that aren’t diverse enough can reduce an image recognition system’s ability to perform well in different contexts. For example, only using high-quality images means a system will adapt poorly to low-quality images, and vice versa. Transfer learning is a technique that can help a model widely apply learned knowledge to new data sets.

Cybersecurity Threats

Bad actors can use a method called data poisoning to corrupt a training data set, affecting an image recognition model during the training process. Adversarial attacks are another approach, in which hackers subtly alter input images to trick an already-trained model into misclassifying them. Teams can implement adversarial training and other security measures to combat these attacks.

Limited Ability to Understand Context

While image recognition systems may excel at identifying specific objects, they may not always pick up on context and how that shapes the relationships between objects. To get better results, teams can use more complex machine learning algorithms and train them on larger volumes of diverse data.

Privacy Concerns Around Data Collection

Image recognition systems can compile loads of visual data that can be considered highly sensitive, raising ethical questions. Do companies need user permission to collect this data? And what do they do with this data? These conversations may ultimately determine how image recognition technology is applied.

Image Recognition vs. Computer Vision

Image recognition is a subset of computer vision, which is a broader field of artificial intelligence that trains computers to see, interpret and understand visual information from images or videos.

Computer vision involves several “sub-problems” or “tasks,” as Khanna put it — including image classification, or assigning a single label to an image such as “car” or “apple.” This leads to more complicated tasks like object detection, which involves not only identifying the many things within an image (cars, animals, trees and so on), but also localizing them within the scene. And then there’s scene segmentation, where a machine classifies every pixel of an image or video and identifies what object is there, allowing for easier identification of amorphous objects like bushes, the sky or walls.
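The difference between these tasks shows up clearly in the shape of their outputs. The sketch below writes out hypothetical results for the same tiny 3-pixel-wide, 2-pixel-tall image; the labels, box format and values are assumptions for illustration.

```python
# Image classification: a single label for the whole image.
classification = "car"

# Object detection: a label plus a location for each object found.
# Boxes are (x, y, width, height) in pixels, an assumed convention.
detection = [
    {"label": "car", "box": (0, 1, 2, 1)},
    {"label": "tree", "box": (2, 0, 1, 2)},
]

# Segmentation: a label for every pixel, which suits amorphous regions
# like the sky that do not fit neatly into a box.
segmentation = [
    ["sky", "sky", "tree"],
    ["car", "car", "tree"],
]

# Count how many pixels belong to each class in the segmentation.
pixels_per_class = {}
for row in segmentation:
    for label in row:
        pixels_per_class[label] = pixels_per_class.get(label, 0) + 1

print(classification)
print([item["label"] for item in detection])
print(pixels_per_class)
```

Each step down the list carries more spatial detail than the last: one label, then labeled rectangles, then a label for every pixel.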

Image recognition is yet another task within computer vision. Its algorithms are designed to analyze the content of an image and classify it into specific categories or labels, which can then be put to use. In many cases, a lot of the technology used today would not even be possible without image recognition and, by extension, computer vision.

For example, to apply augmented reality (AR) a machine must first understand all of the objects in a scene, both in terms of what they are and where they are in relation to each other. If the machine cannot adequately perceive its environment, there’s no way it can apply AR on top of it. The same goes for self-driving cars and autonomous mobile robots.

“Computer vision is not something that optimizes things or makes things better — it is the thing,” Khanna said. “We are entering an era of products that would not exist without the latest advancements in computer vision.”

Image Recognition vs. Object Detection

Image recognition and object detection are both computer vision tasks, but they are not the same thing.

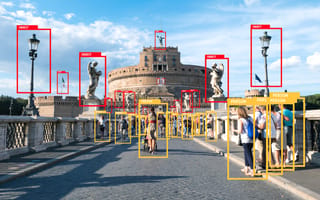

Image recognition identifies and categorizes objects within an image or video, assigning classification labels to each of them. Object detection, by contrast, finds both the instances and locations of objects in an image using bounding boxes, or rectangles that surround each object to show its specific position and dimensions.
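To illustrate what a bounding box encodes, the sketch below finds the smallest rectangle that covers an object's pixels in a small grid. The grid, the (x, y, width, height) convention and the function name are assumptions for illustration; real detectors predict boxes directly rather than deriving them this way.

```python
def bounding_box(mask):
    """Return (x, y, width, height) of the smallest rectangle covering all 1s.

    Returns None if the mask contains no object pixels.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        return None
    x, y = cols[0], rows[0]
    return (x, y, cols[-1] - x + 1, rows[-1] - y + 1)


# 1 marks pixels that belong to the object, 0 marks background.
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

box = bounding_box(mask)
print(box)
```

The four numbers capture both where the object sits in the image and how large it is, which is the extra information object detection adds on top of a classification label.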

Object detection is generally more complex than image recognition, as it requires both identifying the objects present in an image or video and localizing them, along with determining their size and orientation — all of which is made easier with deep learning.

“The concept of deep learning is that you build a system that can learn to make predictions, and get better at making those predictions over time, through the use of statistical models and algorithms,” Jeff Wrona, the VP of product and image recognition at FORM, told Built In. “Object detection is really the process of drawing the box around the things that you care about to narrow down the pixels that I would want to focus on in order to complete deep learning and train the model to get really precise.”

Frequently Asked Questions

What is image recognition?

Image recognition refers to the ability of computers to “see” and interpret visual information similarly to humans. It allows computers to identify and classify specific objects, people, places and other entities within images and videos.

Is image recognition a type of AI?

Image recognition is an application of artificial intelligence. Diving deeper, it can be considered a subset of computer vision.

Is image recognition the same as computer vision?

No, image recognition is actually a subset of computer vision. Computer vision refers more broadly to the field of using AI to identify and interpret visual data in images and videos, while image recognition refers more specifically to the task of identifying objects and then classifying them accordingly.

What is an example of image recognition AI?

A common example of image recognition at work can be found in self-driving cars. These cars use cameras and sensors to detect various objects, and AI models can then classify these objects into stop signs, people, dogs, cars and other categories.

Does ChatGPT have image recognition?

Yes, ChatGPT has the ability to analyze images and identify particular objects. It can then participate in conversations about those images, providing insights and answering questions through either text or speech.