There’s no definitive account of why the first version of Google Glass failed to win consumer acceptance. Some argue it fell prey to its own hype, marketed as a finished product before the kinks were worked out. Others felt it was unfashionable — a missed target falling somewhere between haute couture cyborg and lightweight Robocop.

Whatever the reason, a lot has changed since 2014, and if the world wasn’t ready for Glass at the time — or Glass wasn’t ready for the world — Jason Cottrell, CEO of the software studio Myplanet, believes newer-model augmented reality (AR) glasses are poised for consumer acceptance, especially among Gen Xers and Millennials.

Not only have the UX patterns improved and companies grown wise to the dangers of bombarding users with notifications, Cottrell said, but the glasses look more stylish — think Kate Spade, Dolce & Gabbana or Warby Parker, not scheming sci-fi villain.

“I suspect we’ll see a Samsung — or a Google or an Amazon or Apple — introducing a device like this soon, with a much larger mainstream push,” Cottrell said. “I think that, actually, there is a demographic now that would be receptive to it.”

Myplanet, a team largely made up of white-label AI developers, is at the forefront of several projects to bring augmented reality to wearables. These include a recent partnership with North, a company Google acquired in June 2020 that had focused on everyday prescription smart glasses such as the Focals series.

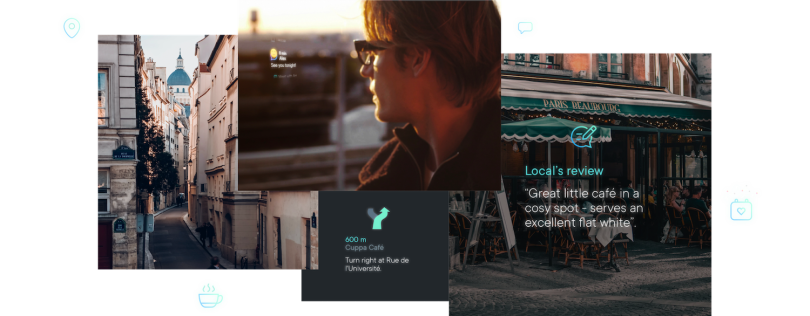

Prior to the acquisition, Myplanet partnered with North and Amazon to enhance the Focals user experience by making location central to the type of visual information users encountered in their fields of vision. Through Alexa’s conversational interface and a wearable ring, the lo-fi prototype let travelers take sightseeing tours and enable augmented-reality features — holograms that appear in the retinal display, providing relevant information at the user’s request.

A tourist in Paris, for instance, might open a maps feature to find cultural landmarks, activate a translation tool to learn the French word for coffee, or use a camera to capture the requisite shot of the steps leading to the Basilica of the Sacré-Cœur. Throughout, they remain in control, triggering each experience with voice commands.

A case study on Myplanet’s website is telling: “Each conversation string was carefully written to guide the user through the tour with ease, relying on simple yes/no responses that address the realities of noisy urban streets and the desire to remain inconspicuous as a traveler,” it states. “Additional insights from our AR research determined the need for an at-a-glance approach to info display. Too much text to read took users out of the moment, making them look and feel conspicuous as foreigners.”

The study also notes that “universal translation — an early concept we explored — was quickly set aside in favor of more practical, context-relevant scenarios.”

As Cottrell told me: “We’re not projecting a life-size 3D rendering of the Eiffel Tower, but we’re helping to way-find and navigate our daily interactions, and that’s where these glasses can do well.”

New Forms Led to New Functions — and Those Functions Could Revive Interest in AR Wearables

Any designer worth their salt knows the importance of form, and many can probably recite from memory architect Louis Sullivan’s famous maxim, “form ever follows function.” But as Sullivan’s protégé Frank Lloyd Wright pointed out, the meaning of Modernism’s assumed mantra is less about precedence than it is about interdependence: “Form follows function — that has been misunderstood. Form and function should be one, joined in a spiritual union,” Wright explained.

And this, more than anything, may be why the consumer appetite for AR is growing, Cottrell told me. As AR has moved from bulky headsets and goggles onto devices people already carry, such as smartphones and the apps that run on them, the technology has undergone more than an aesthetic shift. Its capabilities have changed, too.

“Over the last couple of years, as AR has become more available on devices we’re already using, it has provided an interesting new intersection point where the lift to understand is much easier,” Cottrell said. “I don’t have to change my mental model or get another device and go through that learning curve.”

Consumer exposure to AR has also smoothed the path to adoption. Mobile apps like Ikea Place, for instance, let customers browse a product catalog and use an AR viewer to see how furnishings will look in their living spaces by overlaying true-to-scale 3D models onto a camera view of the room.

Snap’s Snapchat lenses, Instagram’s face filters and a Google Maps product discovery feature have similarly exposed consumers to AR’s image-grafting capabilities on smartphones, Shanil Patel, a product designer at Myplanet, noted on Medium. In fact, several years ago, a Nike social campaign recreating Michael Jordan’s iconic free-throw-line dunk helped sell out a release of Air Jordan 3 Tinkers in exactly 23 minutes.

While early AR use cases focused on gaming and entertainment, commercial applications are expanding: A Nasdaq report estimated the market for extended reality (or XR, which includes VR, AR and MR) at $18 billion. Today, architects and engineers use AR to overlay medical equipment in hospital rooms and gather feedback from end users. Technicians use it for complex repairs of car motors and diagnostic equipment. And Inc. reports use cases ranging from medical training applications for students studying human anatomy to logistics tools for optimizing order fulfillment.

Just two weeks ago, Microsoft won a $22 billion contract to supply HoloLens goggles to 120,000 soldiers in the U.S. Army. The program will “let commanders project information onto a visor in front of a soldier’s face, and would include other features such as night vision,” according to a report from Bloomberg. This adds to a list of training applications companies like Microsoft and Magic Leap are promoting to customers.

“Microsoft has carved out good, real-world use cases for remote training for specialty equipment, and that’s where we’ve been starting to build customer use cases as well,” Cottrell said.

But while Microsoft is focused on promoting the value of headsets to companies and public-sector agencies, the consumer market may be where the most notable disruption takes place.

The Ecosystem Effect

Interestingly, the flash point that may push smart glasses and other AR-powered wearables toward broader appeal, Cottrell told me, is improved 3D visualization in smartphones.

The iPhone 12 Pro’s introduction of LiDAR, short for light detection and ranging, is one of the most recent and striking examples of how AR images are becoming harder to distinguish from what the naked eye sees. According to a report in RCR Wireless News, the phone emits pulses of light and times their reflections to measure the distances between objects within a space, making graphic overlays more dimensional and lifelike. Reach toward a holographic image of, say, an artificially rendered tube of toothpaste, and it disappears from view as though concealed by your hand.
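
For readers curious about the mechanics, LiDAR generally rests on the time-of-flight principle: a light pulse travels to a surface and back, so the distance is half the round-trip time multiplied by the speed of light. The Python sketch below illustrates that arithmetic plus a simplified per-pixel occlusion test, the basic idea behind a rendered object vanishing behind your hand. It is an illustrative assumption, not Apple's implementation; the function names are hypothetical, and real systems work with full depth maps, filtering and sensor noise.

```python
# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Hypothetical helper: distance to a surface from a pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half of
    (speed of light * elapsed time).
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def virtual_pixel_visible(virtual_depth_m: float, measured_depth_m: float) -> bool:
    """Hypothetical occlusion test for a single pixel.

    The rendered object is drawn only if it sits closer to the camera than
    the real surface the depth sensor measured at that pixel.
    """
    return virtual_depth_m < measured_depth_m


if __name__ == "__main__":
    # A pulse returning after about 3.34 nanoseconds hit something roughly
    # half a meter away, such as a hand held in front of the phone.
    hand_depth = distance_from_round_trip(3.34e-9)
    print(f"hand is {hand_depth:.3f} m away")
    # A virtual tube of toothpaste placed 0.8 m away falls behind the hand,
    # so that pixel of the toothpaste is hidden.
    print("toothpaste visible:", virtual_pixel_visible(0.8, hand_depth))
```

Comparing depths pixel by pixel is the simplest way to express occlusion; the key point is that it only works when the device knows how far away real surfaces are, which is what a depth sensor like LiDAR supplies.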

Combined with smartwatches and other connected devices, Cottrell said, LiDAR technology, which is also used in autonomous vehicles, may create an ecosystem effect, relieving smart glasses of the burden of running so much data-hungry software.

“If you’re carrying around, essentially, these LiDAR units, it supports the ability, eventually, for better AR glass products,” Cottrell said. “The more we can offload the requirements for app situational awareness, control and rendering from the glasses, the more we can make them smaller, make the battery life run longer and introduce them sooner.”

There is also some quantitative evidence to support Cottrell’s claims that consumer attitudes toward AR are changing. In October of 2020, and again in May, Myplanet surveyed 500 U.S. residents ages 18 to 65 using Google Consumer Surveys to assess consumer comfort with various augmented-reality features — from e-commerce tools to translation software and hairstyle apps.

The results, outlined in the “Robots Among Us” report, are intriguing. Over six months, consumer comfort with augmented-reality smartphones rose from 25 to 35 percent. Comfort with smart glasses held steady at 25 percent over that period, but that score put the glasses on par with other technologies beginning to gain mainstream appeal, such as home assistants, vehicle autopilot and self-parking valets.

“These mid-range scores are really interesting. This is where we see things poised for more adoption over the coming years,” Cottrell said.

Even more interesting, individuals ages 35 to 44 reported greater comfort with smart glasses than cohorts of younger respondents — perhaps because their vision has deteriorated to the point that they’re ready for prescription lenses.

Designers Are the Gatekeepers of User Control and Privacy

The breakdown of typical notions of subject-object separation may be a stumbling block to adoption, though. Back in 2012, a Google developer preview showing a day in the life of a Glass-wearing New Yorker effectively captured many of Glass’s augmented-reality features (much of what you’d find on a smartphone), but there was almost no distinction between the interface and the material world. Yes, Glass had physical lenses, but, as with standard eyeglasses, the wearer wasn’t conscious of them. They were more a portal than a thing.

While the ad humanizes Glass’s AR features, it also betrays, in miniature, the essence of a gripe Cottrell said some users levied against early-edition smart glasses. Being in two worlds at once (the physical world and the world of holograms) can feel disorienting and, without effective guardrails to regulate information flowing in and out of the viewport, intrusive. As Google’s development team acknowledged in its 2012 demo reel, interrupting users during intimate life moments is a genuine concern. A parent peering down at a cooing baby in a bassinet wants to see the child’s adoring eyes, not a notification.

Protecting users’ control over their AR interactions — making sure smart glasses do not become easy targets for continuous ad streams or unwanted surveillance — is one area where Cottrell believes designers could play an important role in the technology’s development.

“Think of it more like the early stages of home smart speakers, where they weren’t pinging notifications into your house, you’d have to trigger the thing, and it is clearly at your command. We’ve been taking the same approach in some of our early work on smart glasses for the same reason: It’s less intrusive,” Cottrell said.

For now, he advises executives to take a measured approach to R&D.

“These products aren’t fully on the market yet. We did the focused collaboration with North and Amazon as a way of understanding it and demonstrating the benefits, but I wouldn’t be rushing our customers, at this point, to stake out a claim. Targeted understanding of how this might apply for your workforce, or as part of a consumer application, is good.”

Still, the AR landscape is shifting quickly, and Cottrell said UX designers would be wise to stay ahead of the curve. Exploring how AR could be integrated into existing smartphone workflows and getting acquainted with prebuilt tools like Metaverse and Google’s ARCore, a software development kit for building AR apps, are good first steps. Thinking about how text-based user flows may evolve into flows guided by voice recognition software, he added, is another way designers can prepare for the emergence of AR in wearables.

A second wave of smart glasses is not so far-fetched, maybe even imminent, he told me. As image fidelity improves, frames become more fashionable, and voice-activated way-finding and wiki-like informational features prove their value, smart glasses could indeed see a second life.